State of AI with Google AI Live 2025


Last month, I attended the Google AI Live conference. Since I’ve been building some exciting AI agent projects with Google, it was a great chance to see what’s new in their ecosystem and how the broader AI landscape is evolving.

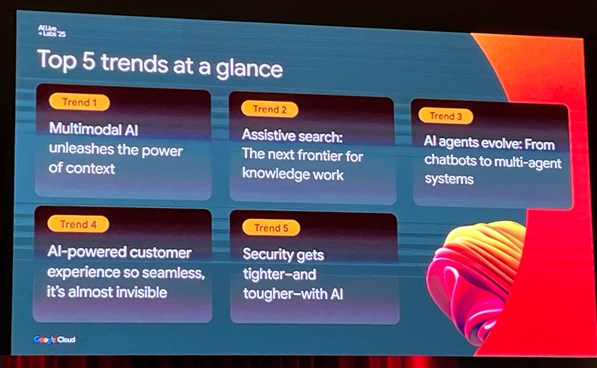

Key Trends in Google’s AI Outlook

Google released a comprehensive AI Trends Report, highlighting several shifts in the industry. Two trends in particular stood out:

1. Multimodal AI: Unlocking Contextual Understanding

LLMs are moving beyond text to integrate image, video, and other modalities. This opens up new opportunities, especially in media, advertising, and creative industries. One example shared was a telco using real-time video chat with AI to troubleshoot home internet issues—blending context, vision, and language into a more intuitive support experience.

2. AI Agents: From Chatbots to Collaborative Systems

We’re seeing a shift from single-purpose bots to multi-agent systems that can access tools, use specialized data, and coordinate tasks. This evolution is pushing AI closer to autonomous workflows, powered by frameworks that manage orchestration among agents.

Customer Panel: Real Use Cases from Optus and Heidi Health

The panel brought together two Google Cloud customers—Optus (an enterprise) and Heidi Health (a startup)—to share how they’re using AI in production.

Optus is applying AI in classic customer service use cases. With millions of support tickets, AI enables smarter self-serve flows powered by FAQ knowledge. Internally, they’re also using AI to index and surface company knowledge more efficiently through search.

Heidi Health is a startup building AI medical scribes. Their system listens to doctor-patient consultations and generates summaries, billing info, follow-ups, and even language translations. They rely heavily on Gemini, primarily due to its cost-efficiency, quality, and low latency.

One insight that really resonated with me came from Heidi Health’s CEO, Thomas. He emphasised that they’re not building “AI for the sake of AI”—they’re solving real workflow inefficiencies in healthcare. AI is a tool, not the product. That kind of clarity is refreshing.

Technical Insights: Working with Gemini

Here’s where things got more technical—through side discussions with the Google team, I picked up some useful tips on using Gemini effectively.

Closing the Final 10%

A common challenge with third-party models is that you often can’t fine-tune them directly. If the model performs well 90% of the time, how do you close the remaining 10%?

One way is through prompt engineering: feeding the model high-quality examples to guide its output. When conversations are too open-ended to cover with a handful of examples, Gemini’s large context window (over a million tokens) helps, because you can include far more examples in a single prompt.

Packing in too much context can confuse the model, though. A Retrieval-Augmented Generation (RAG) pattern helps here: rather than sending every example, you fetch only the most relevant ones from a stored library for each request.
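To make the retrieval idea concrete, here is a minimal sketch of picking few-shot examples dynamically. It is my own illustration, not something shown at the event. The example library, the function names (`retrieve_examples`, `build_prompt`), and the word-overlap similarity are all assumptions; a real system would more likely use an embedding model and a vector store, and would then send the prompt to Gemini.

```python
import math
import re
from collections import Counter

# Hypothetical example library: (user message, ideal response) pairs.
EXAMPLE_LIBRARY = [
    ("My internet keeps dropping every evening",
     "Ask about router placement and check for peak-hour congestion."),
    ("I was charged twice on my bill this month",
     "Apologise, confirm the duplicate charge, and raise a refund."),
    ("How do I change my wifi password",
     "Walk through the router admin page step by step."),
    ("I want to upgrade my mobile plan",
     "List the available plans and confirm the billing date."),
]


def tokenize(text):
    """Lowercase bag-of-words counts (a stand-in for real embeddings)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve_examples(query, library, k=2):
    """Return the k library examples most similar to the query."""
    q = tokenize(query)
    ranked = sorted(library, key=lambda ex: cosine(q, tokenize(ex[0])), reverse=True)
    return ranked[:k]


def build_prompt(query, library, k=2):
    """Assemble a few-shot prompt from only the retrieved examples."""
    parts = ["Follow the style of these examples."]
    for user, ideal in retrieve_examples(query, library, k):
        parts.append(f"User: {user}\nAssistant: {ideal}")
    parts.append(f"User: {query}\nAssistant:")
    return "\n\n".join(parts)


prompt = build_prompt("my bill shows I was charged twice", EXAMPLE_LIBRARY)
print(prompt)
```

The point of the design is that the prompt stays small and focused: the library can grow to thousands of examples while each request only carries the `k` most relevant ones.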

Mixture of Experts for Output Diversity

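Another approach is to generate several candidate responses using different model “expert” variations, such as different prompts or models. You then apply a chain-of-thought evaluation step, or even another LLM acting as a judge, to score the candidates and select the best one. Despite the name, this works at the application level and is not the same thing as the mixture-of-experts architecture used inside some models. This ensemble-like strategy mirrors human collaboration: diversity often leads to stronger results.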
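As a rough sketch of the generate-then-select idea, the snippet below runs a prompt through several variants and keeps the highest-scoring answer. Everything here is hypothetical: the three variant functions return canned strings in place of real model calls, and `toy_judge` is a keyword heuristic standing in for an LLM judge.

```python
from typing import Callable, List, Tuple

Generator = Callable[[str], str]
Judge = Callable[[str, str], float]


def best_of_n(prompt: str, generators: List[Generator], judge: Judge) -> Tuple[str, float]:
    """Generate one candidate per variant and return the best (candidate, score)."""
    candidates = [generate(prompt) for generate in generators]
    scored = [(judge(prompt, candidate), candidate) for candidate in candidates]
    best_score, best_candidate = max(scored, key=lambda pair: pair[0])
    return best_candidate, best_score


# Hypothetical "expert" variants. In practice each would call a model
# with a different system prompt, persona, or temperature.
def concise_variant(prompt: str) -> str:
    return "Restart the router."


def detailed_variant(prompt: str) -> str:
    return ("Restart the router, wait two minutes, then check whether "
            "the status light is solid green.")


def empathetic_variant(prompt: str) -> str:
    return "Sorry about the trouble. Let's sort it out together."


def toy_judge(prompt: str, candidate: str) -> float:
    """Stand-in for an LLM judge: counts actionable keywords in the answer."""
    keywords = ("restart", "wait", "check")
    text = candidate.lower()
    return float(sum(1 for word in keywords if word in text))


VARIANTS = [concise_variant, detailed_variant, empathetic_variant]
answer, score = best_of_n("My internet is down", VARIANTS, toy_judge)
print(answer, score)
```

Keeping the generators and the judge as plain callables means either side can be swapped for a real model call without touching the selection logic.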

Hands-on: Building with the Agent Development Kit (ADK)

The rest of the day was hands-on with Google’s Agent Development Kit (ADK). I’ve been building agentic AI systems with it since its release, and it’s an impressive toolkit for anyone serious about AI automation. Definitely worth a look if you’re exploring multi-agent workflows or intelligent task orchestration.
